Batch scripts are written in a rudimentary scripting language that runs in the Windows command shell. They are usually written in Notepad and need to be saved with a .bat extension. Batch scripting is mostly used for handling Windows functions and automating Windows tasks so you don't need to do them manually. It's a procedural language which relies heavily on goto and labels (names prefixed with a colon, such as :start).

One of the most basic batch scripts can be:

@echo off

title grotesquely simple batch script

echo this is a sample batch file

pause

:: @echo off - stops the commands themselves from being printed while the script runs, so only their output shows

:: title - the text displayed in the title bar of the console window

:: echo - prints text to the console

:: pause - holds the screen until a key is pressed. Without it the programme would run in a blink, without enough time to read it

Even though batch is mostly used for Windows-based chores, it also supports operations common in mainstream programming languages, like simple calculations:

@echo off

title addition

echo input a number

set /p x=

echo input another number

set /p y=

set /a result=x+y

echo their sum is %result%

pause

:: set /p prompts for the user's input. set /p x= means that the user's input will be stored in the x variable

:: set /a result=x+y defines a variable named result that holds the sum of x and y. Note that we use set when defining variables, and /a tells the interpreter that we are handling numbers rather than strings

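Even though batch scripting has no built-in power function, it's entirely possible to write code that raises a number to a power by repeated multiplication. Bear in mind that set /a works on 32-bit signed integers, so large results overflow: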

@echo off

title power function

:start

cls

echo input power base

set /p x=

echo input power exponent

set /p n=

set result=1

for /L %%i IN (1,1,%n%) do SET /a result*=x

echo result is %result%

pause

goto start

Friday, 11 December 2015

Saturday, 28 November 2015

Activity on Arrow and Activity on Node Diagrams

Project Network Diagrams

A project network illustrates the relationships between activities / tasks in the project. Showing the activities as nodes or on arrows between event nodes are the two main ways to draw those relationships.

Two types of network logic:

• Activity on Arrow (AOA)

• Activity on Node (AON)

Activity on Arrow diagrams are used to show only the start and end of the activities.

Figure 1 : Activity on Arrow (AOA)

• Arcs = only indicate activities

• Nodes = events (activity start or end)

Activity on Node (AON) diagrams place the activity on the node, with interconnecting arrows showing the dependency relationships between the activities. Since the activity is on a node, the emphasis (and more data) usually can be placed on the activity.

Figure 2 : Activity on Node (AON)

• Arcs = dependencies between activities. (Events not directly represented)

• Nodes = activities

The main difference between AOA and AON is that AOA diagrams emphasize the milestones (events), whereas AON networks emphasize the tasks.
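As a rough illustration of the AON view, the sketch below stores a small, made-up project as a mapping from each activity (a node) to the activities it depends on (the incoming arrows), and then derives one valid working order. The activity names are hypothetical, and the ordering relies on `graphlib` from the Python standard library (3.9 or later); this is one possible representation, not a standard notation.

```python
from graphlib import TopologicalSorter

# Hypothetical AON network: each key is an activity (a node) and the
# set lists the activities that must finish first (the incoming arrows).
aon_network = {
    "design": set(),
    "build": {"design"},
    "write_manual": {"design"},
    "test": {"build"},
    "release": {"test", "write_manual"},
}


def dependency_arcs(network):
    """Return the arrows of the diagram as (predecessor, successor) pairs."""
    return sorted((pred, act) for act, preds in network.items() for pred in preds)


def working_order(network):
    """Return one order in which every activity follows its predecessors."""
    return list(TopologicalSorter(network).static_order())


if __name__ == "__main__":
    print(dependency_arcs(aon_network))
    print(working_order(aon_network))
```

Note that the arcs carry no information of their own here: they only record which activity depends on which, matching the AON convention that the nodes are the activities.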

Activity on Arrow Advantages:

· An arrow denotes passage of time and therefore is better suited (than a node) to represent a task.

· Scheduling (manually) on an AOA diagram is easier than on an AON diagram.

· In general, AOA needs fewer arrows than AON and therefore the network will be more compact and clear.

Activity on Arrow Disadvantages:

· The AOA network can only show finish-to-start relationships. It simply is not possible to show lead and lag except by adding or subtracting times, which makes project tracking difficult if not impossible.

· There are instances when “dummy” activities can occur in an AOA network. Dummy activities are activities that show the dependency of one task on other tasks but for other than technical reasons. For example, a task may be dependent on another because it would be more cost effective to use the same resources for the two; otherwise, the two tasks could be accomplished in parallel. Dummy activities do not have durations associated with them; they simply show that a task has some kind of dependence on another task.

· AOA diagrams are not as widely used as AON simply because the latter are somewhat simpler to use and all project management software programs can accommodate AON networks, whereas not all can accommodate AOA networks.

Activity on Node Advantages:

· AON does not have dummy activities since the arrows only represent dependencies.

· AON can accommodate any kind of task relationship, including:

- Finish to start

- Finish to finish

- Start to start

- Start to finish

- Lead and lag

· AON is the most widely used network-diagramming method today and all project management software supports it.

Activity on Node Disadvantages:

· AON diagrams do not clearly show the timeline of a project.

· Large networks require dedicated maintenance and analysis.

· Network diagrams do not lend themselves to easy reproduction or distribution.

In general, because of their serious constraints, Activity-on-Arrow diagrams are now rarely used.

References:

http://theroadchimp.com/tag/activity-on-node/

http://pmstudycircle.com/2012/07/precedence-diagramming-method-activity-on-node-method-scheduling/

https://en.wikipedia.org/wiki/Arrow_diagramming_method

http://www.managementtutor.com/difference-between/difference-between-PDM-and-AOA.html

http://www.syque.com/quality_tools/tools/TOOLS15.htm

Delphi Method

The Delphi method is a systematic, multistage survey technique used to assess future events, trends, technical developments and the like. The survey is answered by a panel of experts over several rounds; in each round the experts revise their earlier answers, so the range of answers narrows until consensus is reached.

Delphi is based on the principle that forecasts (or decisions) from a structured group of individuals are more accurate than those from unstructured groups. The technique can also be adapted for use in face-to-face meetings, and is then called mini-Delphi or Estimate-Talk-Estimate (ETE). Delphi has been widely used for business forecasting such as market research and has certain advantages over another structured forecasting approach, prediction markets.
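The narrowing of answers from round to round can be pictured with a toy simulation. The sketch below is not part of the Delphi method itself: it assumes, purely for illustration, that after each round every expert sees the panel median and moves a fixed fraction of the way towards it. The function name, the `pull` factor and the starting estimates are all made up.

```python
from statistics import median


def delphi_rounds(estimates, pull=0.5, tolerance=1.0, max_rounds=20):
    """Simulate Delphi rounds and return the estimates of every round.

    Assumption (illustrative only): after seeing the panel median,
    each expert moves a fixed fraction `pull` of the way towards it.
    """
    history = [list(estimates)]
    while (max(history[-1]) - min(history[-1]) > tolerance
           and len(history) <= max_rounds):
        current = history[-1]
        feedback = median(current)  # summary fed back to the panel
        history.append([e + pull * (feedback - e) for e in current])
    return history


if __name__ == "__main__":
    for number, answers in enumerate(delphi_rounds([10, 14, 20, 35])):
        spread = max(answers) - min(answers)
        print(f"round {number}: spread {spread:.2f}")
```

In a real study the experts revise their answers using judgement rather than a formula; the point of the sketch is only that repeated feedback shrinks the spread until it falls below an agreed tolerance.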

Internet 2

Internet2 is a not-for-profit United States computer network consortium which started as a project for a faster internet based on optical fiber backbones supporting higher bandwidths. It worked under the codename Abilene, named after a 19th century railway from Kansas. The original goal of Internet2 was the transmission of data at speeds of 2.48 Gbit/s and higher. Since 2004 this default speed has increased to 10 Gbit/s. It's noteworthy that Internet2, unlike the conventional internet, wasn't designed with military ends in mind. Since 1998, Internet2 has connected about 115 colleges and research institutes. Starting in 2004, it has grown to serve as a means of communication for over 200 national institutions. Internet2 also works as a sandbox for the development and deployment of features to be released later for the internet. These technologies include large-scale network performance measurement and management tools, secure identity and access management tools and capabilities such as scheduling high-bandwidth, high-performance circuits.

Friday, 27 November 2015

Service Design IT Security Management

ITIL security management works best when built into a company's overall security management, since the latter is far broader in scope than what a standard IT service provider can offer.

ITIL V3 assigns Information Security Management to the Service Design process, thereby achieving better integration with the rest of the service life cycle stages. In earlier versions, Information Security Management had its own publication. The process has also been adapted to fit the new findings and requirements of IT security. ITIL offers an overview of the most important activities involved and suggestions on how to integrate them with the other service management stages.

ITIL Security management comprises the following aspects:

Design of Security Control - design of suitable technical and organisational measures to ensure that assets, information, data and IT services retain their reliability, integrity and availability according to the company's needs.

Security tests - ensure that every security mechanism goes through regular checks.

Recovery from security Incidents - find out and learn about attacks and the ensuing damage on business systems and how to minimise damage in light of a successful attack attempt, besides ensuring that

Security Review - go over security measures and procedures to make sure they are in harmony with the perception of risk from the business' point of view.

Thursday, 26 November 2015

Port mirroring

Port mirroring, also called SPAN (Switched Port Analyzer) on Cisco systems, consists of a switch sending a copy of the packets seen on one port to another port, so that traffic can be monitored continuously, for example to spot unwanted intrusions. Network engineers or administrators use port mirroring to analyze and debug data or diagnose errors on a network. It helps administrators keep a close eye on network performance and alerts them when problems occur. It can be used to mirror either inbound or outbound traffic (or both) on single or multiple interfaces. Attaching a protocol analyser to the port that receives the mirrored data allows accurate measurement of network performance, with each segment of data analysed separately.
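The behaviour described above can be mimicked with a toy model. The sketch below is not how a real switch is configured or implemented; the class name, the port numbers and the `"rx"`/`"tx"`/`"both"` direction labels are assumptions chosen for the example. It only shows the idea that frames are forwarded as usual and a copy is handed to the monitoring port whenever the mirrored port is involved in the chosen direction.

```python
from dataclasses import dataclass, field


@dataclass
class MirroringSwitch:
    """Toy switch that copies traffic from one port to a monitoring port."""
    source_port: int
    monitor_port: int
    direction: str = "both"  # "rx" (inbound), "tx" (outbound) or "both"
    delivered: dict = field(default_factory=dict)  # port -> list of frames

    def _deliver(self, port, frame):
        self.delivered.setdefault(port, []).append(frame)

    def forward(self, in_port, out_port, frame):
        """Forward a frame normally, then mirror it if the source port is involved."""
        self._deliver(out_port, frame)
        inbound = in_port == self.source_port and self.direction in ("rx", "both")
        outbound = out_port == self.source_port and self.direction in ("tx", "both")
        if inbound or outbound:
            self._deliver(self.monitor_port, frame)


if __name__ == "__main__":
    switch = MirroringSwitch(source_port=1, monitor_port=9, direction="rx")
    switch.forward(1, 2, "frame A")  # received on port 1: mirrored
    switch.forward(2, 1, "frame B")  # sent out of port 1: ignored in "rx" mode
    print(switch.delivered)
```

A protocol analyser would sit on the monitoring port (port 9 in the example) and see only the copies, without disturbing the original traffic.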

Intrusion Detection System (IDS)

An Intrusion Detection System (IDS) is a system designed to recognise attacks against either the host computer or the network. An IDS can complement a firewall or run directly on a monitored computer system to increase network security. A network-based IDS (NIDS) watches network traffic, including attacks that come from inside the network (authorized users).

There are three types of IDS:

Host-based IDS

Network-based IDS

Hybrid IDS

Some systems may attempt to stop an intrusion attempt but this is neither required nor expected of a monitoring system. Intrusion detection and prevention systems (IDPS) are primarily focused on identifying possible incidents, logging information about them, and reporting attempts. In addition, organizations use IDPSes for other purposes, such as identifying problems with security policies, documenting existing threats and deterring individuals from violating security policies. IDPSes have become a necessary addition to the security infrastructure of nearly every organization.
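To make the "identify, log and report" cycle more tangible, here is a deliberately tiny host-based check. Everything in it is an assumption for the sake of the example: the `FAILED_LOGIN <source>` log format, the threshold and the alert wording are made up, and real products use far richer signatures and anomaly models.

```python
from collections import Counter


def detect_bruteforce(log_lines, threshold=3):
    """Return alerts for sources with at least `threshold` failed logins.

    The log format "FAILED_LOGIN <source>" is invented for this example.
    """
    failures = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "FAILED_LOGIN":
            failures[parts[1]] += 1  # log the incident per source
    return [
        f"ALERT: {count} failed logins from {source}"
        for source, count in sorted(failures.items())
        if count >= threshold
    ]


if __name__ == "__main__":
    sample = ["FAILED_LOGIN 192.0.2.7"] * 3 + ["LOGIN_OK 192.0.2.8"]
    for alert in detect_bruteforce(sample):
        print(alert)
```

The function only reports; it makes no attempt to block the source, which matches the point that stopping an intrusion is not required of a monitoring system.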

Wednesday, 25 November 2015

Kerberos

Kerberos is a distributed authentication protocol for open and unsafe networks. It offers a safe authentication environment on an otherwise unsafe TCP/IP network and works on the basis of 'tickets' that allow nodes communicating over a non-secure network to prove their identity to one another in a secure manner. Kerberos protocol messages are protected against eavesdropping and replay attacks. Kerberos builds on symmetric key cryptography and requires a trusted third party, and optionally may use public-key cryptography during certain phases of authentication.

The client authenticates itself to the Authentication Server (AS), which forwards the username to a key distribution center (KDC). The KDC issues a ticket-granting ticket (TGT), which is time stamped, encrypts it using the ticket-granting service's (TGS) secret key and returns the encrypted result to the user's workstation. This is done infrequently, typically at user logon; the TGT expires at some point, though it may be transparently renewed by the user's session manager while they are logged in.

When the client needs to communicate with another node ("principal" in Kerberos parlance) the client sends the TGT to the ticket-granting service (TGS), which usually shares the same host as the KDC. After verifying the TGT is valid and the user is permitted to access the requested service, the TGS issues a ticket and session keys, which are returned to the client. The client then sends the ticket to the service server (SS) along with its service request.
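The ticket flow (logon, TGT, service ticket, service request) can be traced with a toy model. The sketch below is not Kerberos: it leaves out session keys and the user's password entirely, and it swaps real encryption for an HMAC tag from the Python standard library, so tickets here are tamper-evident but not secret. All keys, names and lifetimes are hypothetical.

```python
import hashlib
import hmac
import json
import time


def seal(key: bytes, payload: dict) -> dict:
    """Stand-in for encryption: attach an HMAC tag only a key holder can make."""
    body = json.dumps(payload, sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def unseal(key: bytes, ticket: dict) -> dict:
    """Verify the tag and the expiry time, then return the payload."""
    expected = hmac.new(key, ticket["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, ticket["tag"]):
        raise ValueError("ticket was not issued with this key")
    payload = json.loads(ticket["body"])
    if payload["expires"] < time.time():
        raise ValueError("ticket has expired")
    return payload


# Hypothetical long-term keys, shared only between the KDC and each party.
TGS_KEY = b"tgs-secret"
SERVICE_KEYS = {"fileserver": b"fileserver-secret"}


def issue_tgt(user: str, lifetime: int = 3600) -> dict:
    """AS/KDC step: issue a time-stamped ticket-granting ticket at logon."""
    return seal(TGS_KEY, {"user": user, "expires": time.time() + lifetime})


def issue_service_ticket(tgt: dict, service: str, lifetime: int = 300) -> dict:
    """TGS step: check the TGT, then issue a ticket for the requested service."""
    user = unseal(TGS_KEY, tgt)["user"]
    payload = {"user": user, "service": service, "expires": time.time() + lifetime}
    return seal(SERVICE_KEYS[service], payload)


def service_accepts(service: str, ticket: dict) -> str:
    """SS step: the service validates the ticket with its own key."""
    return unseal(SERVICE_KEYS[service], ticket)["user"]


if __name__ == "__main__":
    tgt = issue_tgt("alice")
    ticket = issue_service_ticket(tgt, "fileserver")
    print(service_accepts("fileserver", ticket))
```

The design point the sketch keeps is that the client never needs the TGS or service keys: it merely carries tickets that only the intended recipient can validate.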

Simple Network Management Protocol

The Simple Network Management Protocol (SNMP) is a network protocol developed by the IETF to monitor and control all network components (routers, servers, switches, printers, hosts, etc.) from a central station. It consists of a set of standards for network management, including an application layer protocol, a database schema, and a set of data objects. This protocol governs all communication between monitored devices and the monitoring station. SNMP describes the structure of the data packets to be sent off as well as the communication flow. To make this task possible, SNMP provides:

- monitoring of IP network components

- remote control and remote configuration of network components

- error detection and notification

By virtue of its simplicity, modularity and versatility, SNMP has established itself as the standard of choice, supported by most management programmes as well as by the hardware devices on the network.

In typical uses of SNMP one or more administrative computers, called managers, have the task of monitoring or managing a group of hosts or devices on a computer network. Each managed system executes, at all times, a software component called an agent which reports information via SNMP to the manager.

Another way of putting it is to think of SNMP agents as snitches: always-on punks that rat out to the manager.

SNMP agents expose management data on the managed systems as variables. The protocol also permits active management tasks, such as modifying and applying a new configuration through remote modification of these variables, much like the environment variables in Windows policies which affect all users. The variables accessible via SNMP are organized in hierarchies. These hierarchies, and other metadata (such as type and description of the variable), are described by Management Information Bases (MIBs). It soon becomes obvious how MIBs dictate hierarchies in an attempt to keep the variables sorted in a neat manner. If the 5 S's were to be applied here, MIBs would be tools for 整頓 (Seiton, or setting things in order).

An SNMP-managed network consists of three key components:

Managed device

Agent — software which runs on managed devices

Network management station (NMS) — software which runs on the manager

A managed device is a network node that implements an SNMP interface that allows unidirectional (read-only) or bidirectional (read and write) access to node-specific information. Managed devices exchange node-specific information with the NMSs. Sometimes called network elements, the managed devices can be any type of device, including, but not limited to, routers, access servers, switches, cable modems, bridges, hubs, IP telephones, IP video cameras, computer hosts, and printers.
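The manager/agent relationship and the hierarchical variables can be sketched without any network code. The class below is a toy in-memory stand-in, not an SNMP library: the class and method names are made up, and only the two OIDs used in the example (sysDescr and sysName from the standard system group) are real identifiers. It shows read access, walking the tree in order, and write access limited to variables the agent allows.

```python
class ToyAgent:
    """In-memory stand-in for an SNMP agent; no network traffic is involved."""

    def __init__(self, variables, writable=()):
        # Keys are OIDs as tuples of ints so that they sort in tree order.
        self.variables = {self._parse(oid): value for oid, value in variables.items()}
        self.writable = {self._parse(oid) for oid in writable}

    @staticmethod
    def _parse(oid):
        return tuple(int(part) for part in oid.split("."))

    def get(self, oid):
        """Read one variable (what a manager does with a GET request)."""
        return self.variables[self._parse(oid)]

    def get_next(self, oid):
        """Return the next (oid, value) pair in tree order, or None at the end."""
        start = self._parse(oid)
        for candidate in sorted(self.variables):
            if candidate > start:
                return ".".join(map(str, candidate)), self.variables[candidate]
        return None

    def set(self, oid, value):
        """Change a variable if the agent allows write access to it."""
        key = self._parse(oid)
        if key not in self.writable:
            raise PermissionError(f"{oid} is read-only")
        self.variables[key] = value


if __name__ == "__main__":
    agent = ToyAgent(
        {"1.3.6.1.2.1.1.1.0": "Example router",   # sysDescr
         "1.3.6.1.2.1.1.5.0": "core-sw-01"},      # sysName
        writable=["1.3.6.1.2.1.1.5.0"],
    )
    print(agent.get_next("1.3.6.1.2.1.1"))  # first variable under the subtree
    agent.set("1.3.6.1.2.1.1.5.0", "core-sw-02")
    print(agent.get("1.3.6.1.2.1.1.5.0"))
```

Storing each OID as a tuple of integers makes the hierarchy explicit: sorting the keys yields the same order in which a manager would walk the tree.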

Internet Architecture Board

The Internet Architecture Board (IAB) is a committee responsible for providing advisory support for, and charged with oversight of, the standards activities of the Internet Engineering Task Force (IETF). The IAB showed increasing interest in the long-term architectural future of the internet, so it set out to oversee the standards processes that establish the protocol parameter values of the internet as they are known today. It is also in charge of the editorial management and publication of the Request for Comments (RFC) document series.

The body which eventually became the IAB was created originally by the United States Department of Defense's Defense Advanced Research Projects Agency with the name Internet Configuration Control Board during 1979; it eventually became the Internet Advisory Board during September, 1984, and then the Internet Activities Board during May, 1986 (the name was changed, while keeping the same acronym). It finally became the Internet Architecture Board, under ISOC, during January, 1992, as part of the Internet's transition from a U.S.-government entity to an international, public entity.

Monday, 23 November 2015

Text linguistics - outline

Text linguistics is a branch of linguistics whose main object of study is the text as a means of communication. The application of text linguistics has evolved from relying on grammar alone to understand the meaning in textual media to a point in which text is viewed in much broader terms that go beyond a mere extension of traditional grammar towards an entire text. Text linguistics takes into account the form of a text, but also its setting, or how it is situated in an interactional, communicative context. Both the author and the addressee are responsible for the understood meaning conveyed in the text. This means that the intended message in said text becomes subject to the way that the reader perceives it. In general it is an application of discourse analysis at the much broader level of text, rather than just a sentence or word.

Main aspects:

Intentionality - concerns the text producer's attitude and intentions as the text producer uses cohesion and coherence to attain a goal specified in a plan. Without cohesion and coherence, intended goals may not be achieved due to a breakdown of communication. However, depending on the conditions and situations in which the text is used, the goal may still be attained even when cohesion and coherence are not upheld.

Acceptability - concerns the text receiver's attitude that the text should constitute useful or relevant details or information such that it is worth accepting. Text type, the desirability of goals and the political and sociocultural setting, as well as cohesion and coherence are important in influencing the acceptability of a text. In short, it is the way we react to and interact with what we read.

Informativity - concerns the extent to which the contents of a text are already known or expected as compared to unknown or unexpected. No matter how expected or predictable content may be, a text will always be informative at least to a certain degree due to unforeseen variability. The processing of highly informative text demands greater cognitive ability but at the same time is more interesting. The level of informativity should not exceed a point such that the text becomes too complicated and communication is endangered. Conversely, the level of informativity should also not be so low that it results in boredom and the rejection of the text.

Intertextuality - concerns the factors which make the utilization of one text dependent upon knowledge of one or more previously encountered texts. If a text receiver does not have prior knowledge of a relevant text, communication may break down because the understanding of the current text is obscured. In texts such as parodies, rebuttals, forums and classes in school, the text producer has to refer to prior texts while the text receivers have to have knowledge of the prior texts for communication to be efficient or even occur.

https://en.wikipedia.org/wiki/Text_linguistics#Acceptability

NSFnet

The National Science Foundation Network (NSFNET) is a former programme of coordinated projects sponsored by the National Science Foundation (NSF) to develop an advanced research network. From 1986 to 1995 it gave rise to several nationwide backbone networks in support of the NSF's initiative and, with enough public and private funding, became a major internet backbone.

ARPANET

ARPANET is an acronym that stands for Advanced Research Projects Agency Network. It was an early packet switching network and the first one to implement the concept of the TCP/IP stack, originally an effort by a small research group from MIT. ARPANET was initially funded by the Advanced Research Projects Agency (ARPA) of the United States Department of Defense. Packet switching succeeded circuit switching, in which voice and data communications rely on a (temporarily) dedicated end-to-end line. That dedicated line is typically composed of many intermediary lines which are assembled into a chain that stretches all the way from the originating station to the destination station. With packet switching, a data system could use a single communication link to communicate with more than one machine by collecting data into datagrams and transmitting these as packets onto the attached network link, as soon as the link becomes idle.
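The contrast with a dedicated line is easier to see in miniature. The sketch below is only an illustration: the packet layout (sender, sequence number, data), the fixed packet size and the round-robin sharing of the link are assumptions made for the example, not a description of how ARPANET scheduled traffic.

```python
from itertools import zip_longest


def to_packets(sender, message, size=4):
    """Split a message into numbered packets tagged with their sender."""
    return [
        {"sender": sender, "seq": i, "data": message[start:start + size]}
        for i, start in enumerate(range(0, len(message), size))
    ]


def share_link(*packet_streams):
    """Interleave packets from several senders onto one link, round-robin."""
    link = []
    for group in zip_longest(*packet_streams):
        link.extend(packet for packet in group if packet is not None)
    return link


def reassemble(link, sender):
    """Collect one sender's packets from the link and rebuild its message."""
    own = sorted((p for p in link if p["sender"] == sender), key=lambda p: p["seq"])
    return "".join(p["data"] for p in own)


if __name__ == "__main__":
    link = share_link(to_packets("A", "hello world"), to_packets("B", "packets"))
    print([p["sender"] for p in link])
    print(reassemble(link, "A"), "|", reassemble(link, "B"))
```

Two conversations share one link here without either of them holding it exclusively, which is exactly what a circuit-switched line cannot offer.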

Friday, 20 November 2015

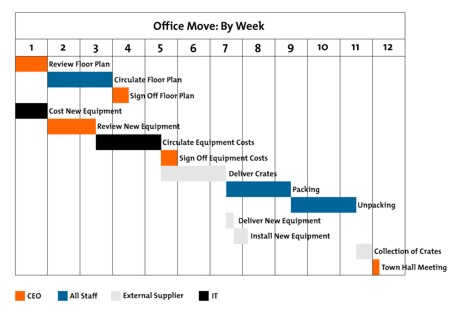

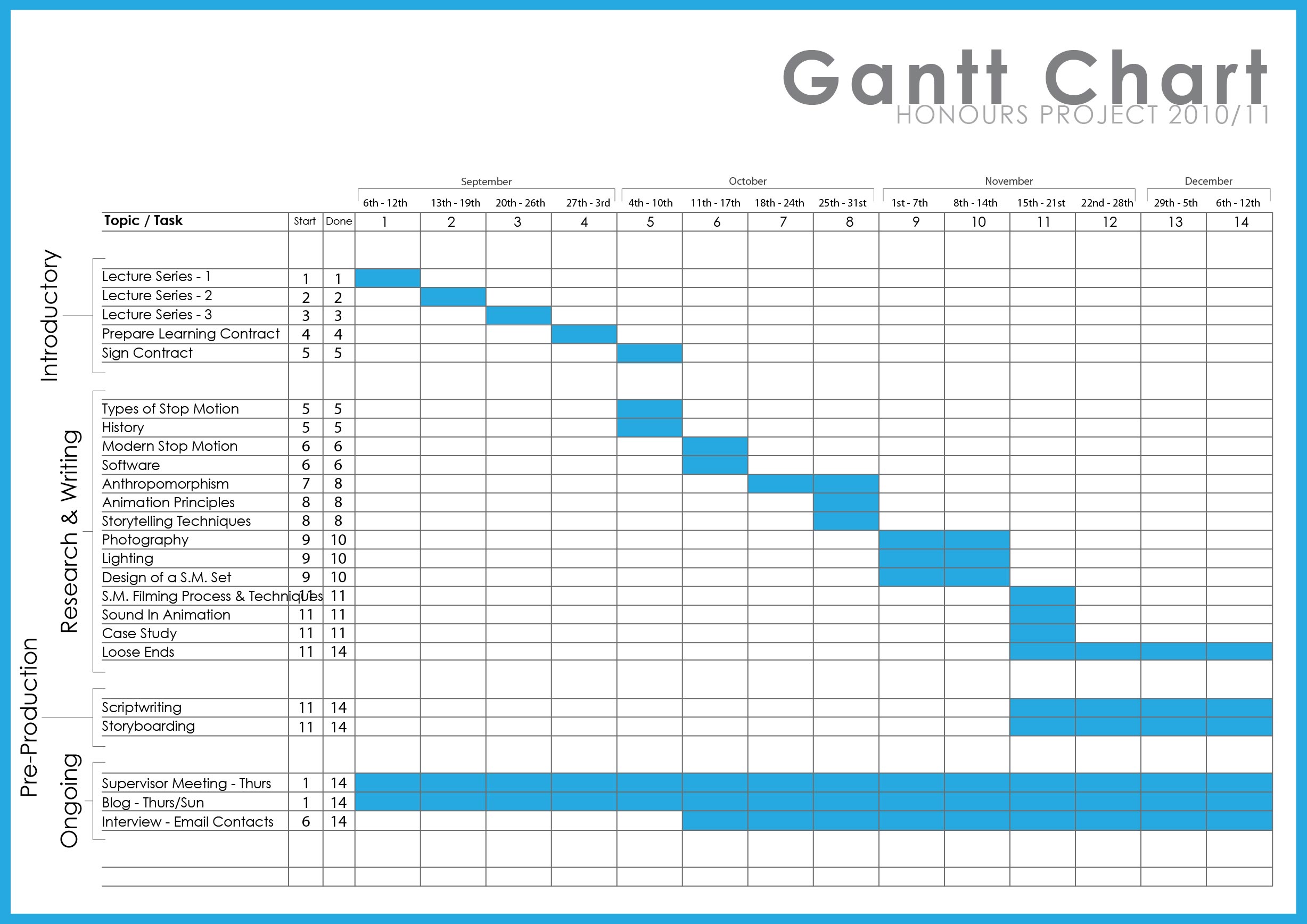

Gantt Chart

A Gantt chart is a type of bar chart used in project management to illustrate the schedule of project activities. Gantt charts illustrate the start and finish dates of the work breakdown structure (the terminal and summary elements) of a project, as well as the precedence network of the activities therein (dependency). Gantt charts can be used to show current schedule status using percent-complete shadings.

Among the advantages of a Gantt chart is the possibility of visualising the duration of every activity, from its start to its finish, over the course of the project.
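A Gantt chart is simple enough to draw in plain text. The sketch below assumes each task is described by a name, a start day, a duration in days and a percent-complete figure; the task data and the choice of `#` for finished days and `-` for remaining days are made up for the example.

```python
def render_gantt(tasks):
    """Return a text Gantt chart, one row per task.

    Each task is (name, start_day, duration_days, percent_complete);
    '#' marks completed days and '-' marks remaining ones.
    """
    label_width = max(len(name) for name, *_ in tasks)
    rows = []
    for name, start, duration, percent in tasks:
        done = round(duration * percent / 100)
        bar = " " * start + "#" * done + "-" * (duration - done)
        rows.append(f"{name:<{label_width}} |{bar}")
    return "\n".join(rows)


if __name__ == "__main__":
    project = [
        ("Design", 0, 4, 100),
        ("Build", 4, 6, 50),
        ("Test", 10, 3, 0),
    ]
    print(render_gantt(project))
```

Each bar starts at the task's start day and its length equals the duration, so both the schedule and the percent-complete shading can be read off a single row.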

Examples of Gantt chart:

http://www.vertex42.com/ExcelTemplates/excel-gantt-chart.html

https://www.mindtools.com/pages/article/newPPM_03.htm

https://mechanicalsympathy.wordpress.com/2010/10/25/gantt-chart-v1-1/

Sunday, 15 November 2015

Project Scope

Project Scope involves gathering the information required to start a project, and the features the project would need in order to meet its stakeholders' requirements.

In project management, the term scope has two distinct uses, Project Scope and Product Scope, with the former consisting of the work that needs to be accomplished to deliver a product, service, or result with the specified features and functions.

The project scope is an essential element of any project: project managers use it as a written confirmation of the results your project will produce and the constraints and assumptions under which you will work. Both the people who requested the project and the project team should agree to all terms in the project scope before actual project work begins. In order to appeal to the main stakeholders, it should usually include the following information:

Justification: A brief statement regarding the business need your project addresses. (Save the more detailed justification for the project charter.)

Product scope description: The characteristics of the products, services, and/or results your project will produce.

Acceptance criteria: The conditions that must be met before project deliverables are accepted. In other words, your project should meet agreed standards before the stakeholders receive the end result of your project.

Deliverables or objectives: The products, services, and/or results your project will produce.

Project Exclusions: Statements about what the project will not accomplish or produce.

Constraints: Restrictions that limit what you can achieve, how and when you can achieve it, and how much achieving it can cost.

Assumptions: Statements about how you will address uncertain information as you conceive, plan, and perform your project.

Notice that Project Scope is more work-oriented (the hows), while Product Scope is more oriented toward functional requirements (the whats).

Project Charter vs Project Scope:

At first glance both project charter and project scope seem to address the same thing. Both provide a baseline for managing and executing a project while showcasing the schedule and resources. However, bear in mind that it's the Project Charter that acknowledges the existence of a project. This means that if you only turn in the project scope without an accompanying Project Charter, your Project Scope won't be taken seriously. Your stakeholders have to approve your Project Charter before you get ahead in the project. Moreover, without a project charter, your customer may mistakenly assume you're responsible for petty issues that are otherwise beyond your responsibilities. In order to establish clearly what you're supposed to do and what the project is supposed to accomplish, you should specify so in the project charter, which should be drawn up by the corporate executive or sponsor. The project deliverables and objectives are stated in the Project Scope, which can only be drawn up AFTER the approval of the Project Charter.

The project scope is developed by the Project Manager with his team members.

http://www.dummies.com/how-to/content/what-to-include-in-a-project-scope-statement.html

http://www.cio.com.au/article/401353/how_define_scope_project/

Project Charter

The Project Charter is a document that confirms the existence of a formal project. It's a statement of the scope, objectives and participants of the project, providing a delineation of roles and responsibilities, identifying the main stakeholders and defining the authority of the project manager. The project charter is usually a short document that refers to more detailed documents such as a new offering request or a request for proposal. Projects as described in the Project Charter can be ranked and authorized by Return on Investment.

In Initiative for Policy Dialogue (IPD), this document is known as the project charter. In customer relationship management (CRM), it is known as the project definition report. Both IPD and CRM require the project charter as part of the project management process.

The purpose of the project charter is to document:

Reasons for undertaking the project

Objectives and constraints of the project

Directions concerning the solution

Identities of the main stakeholders

In-scope and out-of-scope items

Risks identified early on (A risk plan should be part of the overall project management plan)

Target project benefits

High level budget and spending authority

IT Service Management Forum (itSMF)

The IT Service Management Forum (itSMF) is an independent, not-for-profit institution of IT Service Management (ITSM) professionals worldwide. The role of itSMF is to collect, develop and publish "best practice", to support education and training in ITSM tools, to put forward advisory ideas about ITSM, and to hold conventions. The itSMF is concerned with promoting ITIL (IT Infrastructure Library) and best practice in IT Service Management, with a strong emphasis on the ISO/IEC 20000 standard. The itSMF publishes books covering various aspects of Service Management through a process of endorsing them as part of the itSMF Library.

Organizations with ties to ITIL:

1- The Office of Government Commerce (OGC) is part of the Efficiency and Reform Group of the Cabinet Office, a department of the Government of the United Kingdom.

2- The Stationery Office (TSO), a privatised publishing arm of Her Majesty's Stationery Office, is the current ITIL publisher.

3- Axelos is the official accreditor for qualifications based on their Best Practice guidance portfolio.

In addition to ITIL, itSMF also encompasses the following topics:

a - ISACA is an international professional association, well known for COBIT (Control Objectives for Information and Related Technology) as framework for IT Management and IT Governance.

b- ISO/IEC 20000 - The international standard against which organizations can demonstrate their performance in ITSM.

c- ISO/IEC 15504, also known as SPICE (Software Process Improvement and Capability Determination) - The international standard for the capability of information technology processes, measured with a standard assessment method.

References:

http://www.itsmfi.org/

https://en.wikipedia.org/wiki/IT_Service_Management_Forum

5S

5S is a workplace organisation method that originated in the production industry, although it has increasingly been applied in the service sector as well. It is an instrument for keeping the workplace clean, orderly and suitable for work practices. Since cleanliness and order are the basic requirements of efficient work processes, 5S is often used as a tool for continuous improvement. The five terms can be translated from the Japanese as "sort", "straighten", "shine", "standardise" and "sustain", and they are:

Seiri

整理 (Sort)

- Keep only necessary items

- Make work easier by eliminating obstacles.

- Reduce chance of being disturbed with unnecessary items

- Prevent accumulation of unnecessary items

- Segregate unwanted material from the workplace

Seiton

整頓 (Systematic Arrangement)

- Can also be translated as "put in order", "straighten", or "streamline"

- Arrange all necessary items so they can be easily selected for use

- Prevent loss and waste of time

- Ensure first-come-first-served basis

Seiso

清掃 (Shine)

- Can also be translated as "sweep", "sanitize", or "scrub"

- Clean your workplace regularly and thoroughly

- Prevent machinery and equipment deterioration

Seiketsu

清潔 (Standardize)

- Standardise the best practices in the work area.

- Maintain high standards of housekeeping and workplace organisation at all times.

- Everything in its right place.

Shitsuke

躾 (Sustain)

- Also translates as "do without being told"

- Perform regular audits

- Enforce constant training and discipline

https://en.wikipedia.org/wiki/5S_%28methodology%29

Process Maps/ Flowcharts

A process map (also called a process flowchart, process flow diagram, macro flowchart, top-down flowchart, detailed flowchart, deployment flowchart or several-leveled flowchart) is a visual depiction of how processes relate to each other, including the relationship between inputs and outputs and where key decision points lie. Elements that may be included are: the sequence of actions, materials or services entering or leaving the process (inputs and outputs), decisions that must be made, people who become involved, time involved at each step and/or process measurements. The process described can be a manufacturing, administrative or service process, or a project plan.

When to Use a Flowchart:

1 To develop understanding of how a process is done.

2 To study a process for improvement.

3 To communicate to others how a process is done.

4 When better communication is needed between people involved with the same process.

5 To document a process.

6 When planning a project.

Examples:

High-Level Flowchart for an Order-Filling Process

Detailed Flowchart

Commonly Used Symbols in Detailed Flowcharts

One step in the process; the step is written inside the box. Usually, only one arrow goes out of the box.

Direction of flow from one step or decision to another.

Decision based on a question. The question is written in the diamond. More than one arrow goes out of the diamond, each one showing the direction the process takes for a given answer to the question. (Often the answers are "yes" and "no.")

Delay or wait.

Link to another page or another flowchart. The same symbol on the other page indicates that the flow continues there.

Input or output.

Document.

Alternate symbols for start and end points.

References:

ASQ. What is a Process Flowchart? <http://asq.org/learn-about-quality/process-analysis-tools/overview/flowchart.html> Retrieved 15/11/2015.

BARRY, Carly. My Favorite Quality Tool: Process Mapping <http://blog.minitab.com/blog/real-world-quality-improvement/my-favorite-quality-tool-process-mapping> 2013. Retrieved 15/11/2015.

7 tools of quality

The seven quality tools were first proposed by Kaoru Ishikawa because of their indispensable nature and all-around usefulness, not only among quality management professionals but for just about anyone. They are:

3- Control Charts: Also known as Shewhart charts or process-behaviour charts, these are graphs used to spot changes in processes over time. They are a typical tool of statistical process control (hence the name, since Shewhart has a lot to do with using statistical control to achieve quality). Control charts are used to determine whether a process is under control. Being in control means that the process is stable, with predictable variations that are inherent to the process and no ensuing degradation. If analysis of the process reveals that it's not in control, the chart helps track the source of the variation. When a process meets agreed-upon standards of stability but operates outside of specified limits (e.g., scrap rates may be in statistical control but above desired limits), a deliberate effort to understand the causes of the faulty performance is what leads to improvement. The goal of the control chart is to evaluate how stable the quality of a process remains over time.

In computational statistics, stratified sampling is a method of variance reduction when Monte Carlo methods are used to estimate population statistics from a known population.

1- Cause and effect diagram (also called Ishikawa or fishbone chart): Identifies possible causes for an effect or problem and sorts ideas into useful categories. It consists of first stating the effect or problem, then grouping the ideas about what is causing it into major categories in order to identify the sources of variation, which typically include:

People: Anyone involved with the process

Methods: How the process is performed and the specific requirements for doing it, such as policies, procedures, rules, regulations and laws

Machines: Any equipment, computers, tools, etc. required to accomplish the job

Materials: Raw materials, parts, pens, paper, etc. used to produce the final product

Measurements: Data generated from the process that are used to evaluate its quality

Environment: The conditions, such as location, time, temperature, and culture in which the process operates

The fishbone has an ancillary benefit as well. Because people by nature often like to get right to determining what to do about a problem, it can help bring out a more thorough exploration of the issues behind the problem, which will lead to a more robust solution.

2- Check sheet: Its simplicity allows for great accuracy when it's necessary to evaluate the efficiency of a process. The standard procedure for using a check sheet is to draw a matrix with columns and rows and record the behaviour of the data by making check marks in the fields (the defining characteristic of a check sheet), which should be clearly labelled to reflect the assessed process. Test the check sheet for a short trial period to be sure it collects the appropriate data, then keep track of each time the targeted event or problem occurs by recording it on the check sheet. The checks are usually made to indicate defects or flaws in an observed production system.

Check sheets typically employ a heading that answers the Five Ws:

Who filled out the check sheet

What was collected (what each check represents, an identifying batch or lot number)

Where the collection took place (facility, room, apparatus)

When the collection took place (hour, shift, day of the week)

Why the data were collected

Function

Kaoru Ishikawa identified five uses for check sheets in quality control:

To check the shape of the probability distribution of a process

To quantify defects by type

To quantify defects by location

To quantify defects by cause (machine, worker)

To keep track of the completion of steps in a multistep procedure (in other words, as a checklist)
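As a rough illustration of the "quantify defects by type" use, a check sheet can be sketched in Python as a header plus a tally of check marks. The header values, defect names and the tally_by_type helper below are all made up for the example and are not taken from any real form.

```python
from collections import Counter

# Hypothetical header answering the Five Ws; every value is illustrative.
header = {
    "who": "operator A",
    "what": "defects per unit, lot 17",
    "where": "line 2",
    "when": "morning shift",
    "why": "baseline before a process change",
}

# Each entry is one check mark: the defect type observed on one unit.
observations = ["scratch", "dent", "scratch", "crack", "scratch", "dent"]


def tally_by_type(marks):
    """Count the check marks recorded for each defect type."""
    return Counter(marks)


tally = tally_by_type(observations)

# Print the sheet the way it would look on paper: one row of marks per type.
for defect, count in tally.most_common():
    print(f"{defect:<8} {'|' * count} ({count})")
```

The same tally could be keyed by location or by cause instead of by type, which covers the other uses in the list.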

The Four Process States

Processes fall into one of four states: 1) the ideal, 2) the threshold, 3) the brink of chaos and 4) the state of chaos (Figure 1).

When a process operates in the ideal state, that process is in statistical control and produces 100 percent conformance, being predictable and meeting customer expectations. This state is only confirmed after the process has been under observation over a set period of time.

A process that is in the threshold state is still in statistical control even though it produces the occasional nonconformance. This type of process will produce a constant level of nonconformances and exhibits low capability. Although predictable, this process does not consistently meet customer needs.

The brink of chaos state reflects a process that is not in statistical control, but also is not producing defects. The outputs of the process still meet customer requirements, but the unpredictability means the process can produce nonconformances at any moment.

The fourth process state is the state of chaos. Here, the process is not in statistical control and produces unpredictable levels of nonconformance.
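The four states are just the combinations of two yes/no questions: is the process in statistical control, and is it producing only conforming output? A minimal Python sketch of that lookup follows; the function name and its two boolean parameters are assumptions made for this example.

```python
def process_state(in_control: bool, fully_conforming: bool) -> str:
    """Map the two yes/no questions onto the four process states."""
    if in_control and fully_conforming:
        return "ideal"
    if in_control:
        # Predictable, but with a steady level of nonconformance.
        return "threshold"
    if fully_conforming:
        # No defects yet, but unpredictable.
        return "brink of chaos"
    return "state of chaos"


for in_control in (True, False):
    for conforming in (True, False):
        print(in_control, conforming, "->", process_state(in_control, conforming))
```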

Figure 1: Four Process States

Every process falls into one of these states at any given time, but they all tend to gravitate toward the state of chaos. Companies typically begin some type of improvement effort when a process reaches the state of chaos (although arguably they would be better served to initiate improvement plans at the brink of chaos or threshold state). Control charts are used to prevent this natural degradation and steer performance back to the ideal state.

Figure 2: Natural Process Degradation

Elements of a Control Chart

There are three main elements of a control chart as shown in the figure below:

- A control chart begins with a time series graph.

- A central line (X or process location) is added as a visual reference for detecting shifts or trends.

- Upper and lower control limits (UCL and LCL) are calculated from available data and placed equidistant from the central line. This is also referred to as process dispersion.

Figure 3: Elements of a Control Chart

Control limits (CLs) ensure time is not wasted looking for unnecessary trouble – the goal of any process improvement practitioner should be to only take action when warranted. Control limits are calculated by:

- Estimating the standard deviation of the sample data

- Multiplying that number by three

- Adding (3 x s.d.) to the average for the UCL and subtracting (3 x s.d.) from the average for the LCL
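The three steps above can be sketched in a few lines of Python. This is a minimal illustration that follows the steps literally, using the sample standard deviation of the data; real control charts often estimate the spread from subgroups or moving ranges instead. The measurements and the function names are invented for the example.

```python
from statistics import mean, stdev


def control_limits(samples, sigmas=3):
    """Return (LCL, central line, UCL) from a list of baseline measurements."""
    center = mean(samples)
    spread = sigmas * stdev(samples)  # sample standard deviation times three
    return center - spread, center, center + spread


def out_of_control(points, limits):
    """Return (index, value) for every point outside the control limits."""
    lcl, _, ucl = limits
    return [(i, x) for i, x in enumerate(points) if x < lcl or x > ucl]


# Hypothetical baseline measurements used to set the limits.
baseline = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 10.1, 9.9]
limits = control_limits(baseline)
lcl, center, ucl = limits
print(f"LCL={lcl:.3f}  center={center:.3f}  UCL={ucl:.3f}")

# New observations are then checked against the limits.
flagged = out_of_control([10.0, 11.5], limits)
print(flagged)
```

Only points that fall outside the limits are flagged, which matches the idea of taking action only when warranted.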

4- Histogram: Shows the frequency of numerical values. It consists of a display where the data is grouped into ranges and then plotted as bars. To construct a histogram, the first step is to "bin" the range of values (that is, divide the entire range of values into a series of intervals) and then count how many values fall into each interval. The bins are usually specified as consecutive, non-overlapping intervals of a variable. The bins (intervals) must be adjacent, and are usually of equal size.

Histograms are often confused with bar charts. A histogram is used for continuous data, where the bins represent ranges of data, and the areas of the rectangles are meaningful (rectangles touching each other indicates data continuity, thus the gaps between bars matter), while a bar chart is a plot of categorical variables and the discontinuity should be indicated by having gaps between the rectangles, from which only the length is meaningful. Often this is neglected, which may lead to a bar chart being confused for a histogram.
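The binning step can be sketched as follows. This is a minimal Python example, assuming equal-width bins that start at a chosen lower bound; the function name, the bin width and the data are illustrative only.

```python
def histogram_counts(values, start, bin_width):
    """Count values in adjacent, equal-width bins [low, high) beginning at start."""
    if min(values) < start:
        raise ValueError("all values must be >= start")
    n_bins = int((max(values) - start) // bin_width) + 1
    counts = [0] * n_bins
    for v in values:
        counts[int((v - start) // bin_width)] += 1
    # Empty bins are kept so that the intervals stay adjacent.
    return [
        (start + i * bin_width, start + (i + 1) * bin_width, c)
        for i, c in enumerate(counts)
    ]


data = [1, 2, 2, 3, 5, 7, 8, 8, 9, 12]  # hypothetical measurements
bins = histogram_counts(data, start=0, bin_width=5)
for low, high, count in bins:
    print(f"[{low:>2}, {high:>2}) {'#' * count}")
```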

5- Pareto Chart: The Pareto principle is a widely known concept used to explain which causes are responsible for the biggest consequences. The classical formulation is that 20% of the factors are responsible for 80% of the effects. A Pareto diagram is a column chart where single values are arranged according to their significance. The greatest values are on the far left and the smaller values are to the right; the lengths of the bars represent frequency or cost (time or money). The Pareto chart is used to identify the causes that most influence the occurrence of problems.
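The ordering behind a Pareto chart can be sketched in Python by sorting the causes from largest to smallest and keeping a running percentage. The defect categories and counts below are invented for illustration, and pareto_table is a hypothetical helper name.

```python
def pareto_table(counts):
    """Return (cause, count, cumulative %) rows sorted from largest to smallest."""
    total = sum(counts.values())
    ordered = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    rows, running = [], 0
    for cause, n in ordered:
        running += n
        rows.append((cause, n, round(100 * running / total, 1)))
    return rows


# Hypothetical defect counts by cause.
defects = {"misalignment": 15, "scratches": 45, "other": 3, "dents": 30, "discoloration": 7}
table = pareto_table(defects)
for cause, n, cumulative in table:
    print(f"{cause:<14} {n:>3}  {cumulative:>5}%")
```

In this made-up data the two largest causes account for 75% of all defects, which is the kind of concentration the chart is meant to expose.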

6- Scatter diagram (also called scatter plot): Graphs pairs of numerical data, one variable on each axis, to look for a relationship. It uses Cartesian coordinates to represent two variables for a set of data. Two variables are typically used, since the purpose of this tool is to look for a relationship between the compared values. Colour-coding the points allows for more variables.

A scatter plot can be used either when one continuous variable is under the control of the experimenter and the other depends on it, or when both continuous variables are independent. If a parameter exists that is systematically incremented and/or decremented by the other, it is called the control parameter or independent variable and is customarily plotted along the horizontal axis. The measured or dependent variable is customarily plotted along the vertical axis. If no dependent variable exists, either type of variable can be plotted on either axis and a scatter plot will illustrate only the degree of correlation (not causation) between two variables.

A scatter plot can suggest various kinds of correlations between variables with a certain confidence interval. For example, for weight and height, weight would be on the y axis and height on the x axis. Correlations may be positive (rising), negative (falling), or null (uncorrelated). If the pattern of dots slopes from lower left to upper right, it indicates a positive correlation between the variables being studied. If the pattern of dots slopes from upper left to lower right, it indicates a negative correlation. A line of best fit (alternatively called a 'trendline') can be drawn in order to study the relationship between the variables. An equation for the correlation between the variables can be determined by established best-fit procedures. For a linear correlation, the best-fit procedure is known as linear regression and is guaranteed to generate a correct solution in a finite time.
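To make the idea of a trendline concrete, here is a small Python sketch that computes the Pearson correlation coefficient and the least-squares line for paired data. The height and weight figures are invented, and the two function names are choices made for this example.

```python
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def best_fit_line(xs, ys):
    """Least-squares slope and intercept of y as a linear function of x."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return slope, my - slope * mx


heights = [150, 160, 170, 180, 190]  # x axis, hypothetical data in cm
weights = [52, 57, 68, 73, 83]       # y axis, hypothetical data in kg

r = pearson_r(heights, weights)
slope, intercept = best_fit_line(heights, weights)
print(f"r = {r:.3f}, weight = {slope:.2f} * height + {intercept:.1f}")
```

A positive slope with r close to 1 corresponds to dots rising from lower left to upper right.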

One of the most powerful aspects of a scatter plot, however, is its ability to show nonlinear relationships between variables. The ability to do this can be enhanced by adding a smooth line such as loess. Furthermore, if the data are represented by a mixture model of simple relationships, these relationships will be visually evident as superimposed patterns.

When to Use a Scatter Diagram:

-When you have paired numerical data.

-When your dependent variable may have multiple values for each value of your independent variable.

-When trying to determine whether the two variables are related, such as…

-When trying to identify potential root causes of problems.

-When trying to determine objectively whether a particular cause and effect are related on a fishbone diagram.

-When determining whether two effects that appear to be related both occur with the same cause.

-When testing for autocorrelation before constructing a control chart.

7- Stratification: A technique that separates data gathered from a variety of sources so that patterns can be seen (some lists replace "stratification" with "flowchart" or "run chart"). It is used in combination with other data analysis tools: when data from a variety of sources or categories have been lumped together, stratification separates them again so that each group can be examined on its own.

When to Use Stratification:

1-Before collecting data.

2-When data come from several sources or conditions, such as shifts, days of the week, suppliers or population groups.

3- When data analysis may require separating different sources or conditions.

Stratification Procedure

Before collecting data, consider which information about the sources of the data might have an effect on the results. Set up the data collection so that you collect that information as well.

When plotting or graphing the collected data on a scatter diagram, control chart, histogram or other analysis tool, use different marks or colors to distinguish data from various sources. Data that are distinguished in this way are said to be “stratified.”

Analyse the subsets of stratified data separately. For example, on a scatter diagram where data are stratified into data from source 1 and data from source 2, draw quadrants, count points and determine the critical value only for the data from source 1, then only for the data from source 2.
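The procedure can be sketched in Python by recording the source alongside each measurement and then grouping on it. The records, the "shift" and "defects" field names and the stratify helper are hypothetical and exist only for this example.

```python
from collections import defaultdict
from statistics import mean


def stratify(records, source_key, value_key):
    """Group the measured values by the source recorded with each observation."""
    groups = defaultdict(list)
    for record in records:
        groups[record[source_key]].append(record[value_key])
    return dict(groups)


# Hypothetical observations; the shift was collected together with the data.
records = [
    {"shift": "day", "defects": 2},
    {"shift": "night", "defects": 6},
    {"shift": "day", "defects": 3},
    {"shift": "night", "defects": 7},
    {"shift": "day", "defects": 1},
    {"shift": "night", "defects": 8},
]

by_shift = stratify(records, "shift", "defects")
overall = mean(r["defects"] for r in records)
print(f"overall mean: {overall}")
for shift, values in by_shift.items():
    print(f"{shift}: mean {mean(values)}")
```

In this made-up data the pooled mean hides the fact that the two shifts behave very differently, which only shows up once each subset is analysed separately.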

References:

https://en.wikipedia.org/wiki/Ishikawa_diagram

https://en.wikipedia.org/wiki/Check_sheet

https://en.wikipedia.org/wiki/Control_chart

https://en.wikipedia.org/wiki/Histogram

https://en.wikipedia.org/wiki/Pareto_chart

https://en.wikipedia.org/wiki/Scatter_plot

https://en.wikipedia.org/wiki/Stratified_sampling

http://www.itl.nist.gov/div898/handbook/pmc/pmc.htm

http://www.astroml.org/user_guide/density_estimation.html

http://www.psychwiki.com/wiki/What_is_a_scatterplot%3F

Tuesday, 10 November 2015

Hoshin Kanri

Hoshin Kanri (also called Policy Deployment, Management by Policy or Management by Planning) is a method for bringing about progress at the strategic, tactical and operational levels within a company by ensuring that every employee's activities are coordinated in line with the company's strategy. If you ever feel that the company's strategy and goals are omitting your needs or are not meaningful to you, then chances are your company hasn't implemented Hoshin Kanri.

It is not as well-known as the other lean tools. It works best in a well-developed lean culture, where continuous improvement is firmly ingrained at all levels of a company, but any organization can benefit from its core principles:

- Visionary strategic planning (focusing on the things that really matter)

- Catchball (building workable plans through consensus)

- Measuring progress (carefully selecting KPIs that will drive the desired behavior)

- Closing the loop (using regular follow-up to keep progress on track)

Hoshin Kanri relies on a "flattened management framework": the fewer the levels of management, the faster the decision-making process that draws on the information gathered from the closed loop system.

In order to understand Hoshin Kanri, it's important to walk through the following steps:

Step One – Create a Strategic Plan

Hoshin Kanri starts with a strategic plan (e.g. an annual plan) developed by the top management to further the long range goals of the company. This plan should be carefully crafted to address a small number of critical issues. Key items to consider when developing the strategic plan are:

Focus on five goals (or fewer). Drawing up a long list of goals may give a false sense of progress, as if the more goals the better. In reality, a goal only expresses intent; taking action is what matters. Every company has finite resources and energy coupled with a limited attention span. Focusing on a small number of goals makes success far more likely than dissipating energy across dozens of goals. It's similar to the law of diminishing returns: writing up more goals won't result in more progress, because energy that could be channelled into one endeavour is scattered in hastily trying to accomplish more than one can handle.

Effectiveness First: There is a well-known distinction between efficiency and effectiveness: efficiency is doing things right while effectiveness is doing the right things. Strategic goals need to be effective – doing the right things to take the company to the next level.

Evolution vs. Revolution. Goals can be evolutionary (incremental goals usually achieved through continuous improvement) or revolutionary (breakthrough changes with dramatic scope). Both entail progress in the form of improvement. It's similar to Hamel's concept of innovation, which can be incremental (changing the way you perform a process) or radical (changing the process altogether).

Top Down Consensus. Top management is responsible for developing the strategic plan, but consulting with middle management provides additional perspective and feedback that helps craft stronger, more informed strategies while fostering an atmosphere of shared responsibility and earning significantly more buy-in from middle management.

Careful KPIs. Key Performance Indicators (KPIs) provide the means for tracking progress towards goals. It's important to take care when choosing which KPIs will help produce the desired behaviour without creating perverse incentives, like a single-minded pursuit of efficiency at the cost of pushing minor drawbacks aside to be fixed later.

Own the Goal. Every goal should have an owner: a facilitator with the skills and authority to successfully see the goal through to conclusion by removing roadblocks that may be hindering progress and making sure the path to progress is always the one followed.

Step Two – Develop Tactics

Mid-level managers are often tasked with developing the tactics that will best achieve the goals as laid out by top management. One of the most important aspects of this process is "catchball": a back and forth interaction with top management to ensure that the goals are understood and that strategy and tactics are well aligned. Tactics may change, so flexibility and adaptability are essential to this process. As a result it is helpful to have regular progress reviews (e.g. monthly), at which time results are evaluated and tactics are reviewed.

Step Three – Take Action

At the plant floor level, supervisors and team leaders work out the operational details to implement the tactics as laid out by mid-level managers. The catchball pattern makes another appearance here, as managers engage in an ongoing exchange with supervisors and team leaders to ensure alignment with the company's strategy. This is where goals and plans are converted into results. This is gemba (the place where real action occurs). Therefore, managers should stay closely connected to activity at this level, even if this means reenacting the notorious business practice of the hovering boss.

Step Four – Review and Adjust

So far the steps have focused on cascading strategic goals down through the levels of the company, from top management all the way down to the plant floor. Just as important is the flow of information, which should follow the reverse pattern, thus creating a closed loop.

Progress should be tracked continuously and reviewed formally on a regular basis (e.g. monthly). These progress checkpoints provide an opportunity for adjustment of tactics and their associated operational details.
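
As a rough illustration of such a checkpoint, the sketch below compares each KPI's actual value with its target and flags the ones that have fallen behind, so that their tactics can be revisited. The KPI names, the figures, the 90% threshold and the `review_kpis` helper are all hypothetical, chosen only for this example; they are not prescribed by Hoshin Kanri.

```python
def review_kpis(kpis, threshold=0.9):
    """Return the names of KPIs whose actual value is below threshold * target.

    Assumes "higher is better" for every KPI; the default threshold of 0.9
    is an arbitrary choice made for illustration.
    """
    behind = []
    for name, (actual, target) in kpis.items():
        if actual < threshold * target:
            behind.append(name)
    return behind


# Hypothetical monthly figures: KPI name -> (actual, target)
monthly_kpis = {
    "on_time_delivery_pct": (96.0, 98.0),
    "first_pass_yield_pct": (80.0, 95.0),
    "units_per_shift": (450, 480),
}

needs_adjustment = review_kpis(monthly_kpis)
print(needs_adjustment)
```

With these made-up numbers only the first-pass yield falls below 90% of its target, so it is the one KPI whose tactics would be put up for discussion at the review.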

References:

Hoshin Kanri. Lean Production. Retrieved from <http://www.leanproduction.com/hoshin-kanri.html>

Hoshin Management. Wikipedia. Retrieved from <https://de.wikipedia.org/wiki/Hoshin-Management>

Monday, 9 November 2015

The 4 eras of Quality Management

In today's business environment, quality is essential to survival in a competitive, globalised world. Everyone knows the burden of dealing with poor quality, be it in the form of subpar performance or substandard products. In an organisational environment, poor quality is exacerbated when staff are either not used to performing to established quality standards or underperform on purpose. Definitions of quality abound in academic and corporate circles. The most widely used are:

Conformance to specifications: Measures how well the product or service meets the target and tolerance determined by its designer (Crosby, 1979).

Fitness for use: Focuses on how well the product performs its intended function or use (Juran, 1951).

Value for price paid: A definition of quality that consumers often use for product or service usefulness (Garvin, 1984).

A psychological criterion: A subjective definition that focuses on the judgmental evaluation of what constitutes product or service quality (Garvin, 1984).

It becomes clear that quality is linked to how a product or service is judged. To measure quality accurately, judging the features of a product is not enough; the concept of quality has expanded to encompass the personnel, processes, organisational culture and environment behind the design of said product or service. Quality in the manufacturing sector differs from quality in the service sector in one basic respect: a product is tangible and can therefore more easily speak for itself when a transaction with a customer looms, while a service is intangible, so doing business relies more heavily on the commercial side of the company (e.g. marketing).

There has been a shift from isolated quality practices to Total Quality Management, whose tenets are entirely different from past management practices. Over the last century, approaches to quality have followed a trail of refinements of previously held concepts, with each new approach bringing new discoveries and tools to better translate the concept of quality into the strategic goals of the business.

The 4 Eras of quality management

The quality management eras trace an ongoing evolution in quality-oriented management, with one school of thought succeeding another by building on the conclusions drawn from the practices of the previous era:

1 Quality development through inspection

According to Garvin (1988), the development of quality management started with inspection. The Industrial Revolution gave rise to specialists who 'inspected' quality in products. Scientific management emerged in response to environmental influences and provided the basis for the development of inspection-based quality management.

Executive Summary: focus on uniformity; based on the Industrial Revolution; quality control in manufacturing

2 The Statistical Quality Control

In 1931, Walter